Aligning with management

Recently, I was discussing test leadership with an experienced practitioner in the agile testing space (Hi Y'all 👋). Once again, the topic of aligning with management came up. I skipped it at the time, but afterward I kept pondering the question: How can we get better management buy-in for testing?

The realm of testing is worry.

Testing professionals worry. Part of it is our occupational habit of spotting failures and striving for perfection. Part of it is the grind of delivering IT solutions with limited staffing and a narrow quality mindset. One recent example is Paul Mowat, who writes: "What really worries me is that quality is not even on the CEO's agenda, and is not captured well in the Cloud Strategy, where it does, you will find it somewhere within DevOps." [https://linkedin.com/feed/update/urn:li:activity:7202963969811062784/]

And he is indeed right. When building software solutions in the cloud, most things that need testing can and will be automated. The more explicit and low-level a work package is, the easier it is to confirm. Even infrastructure-as-code can be verified automatically and repeatedly. You might find that external integrations are the key challenge. Automating variations and permutations is trivial if your interface has a clear specification. Low-level (unit) testing is indeed in the "automation zone": "These tasks are highly process-oriented, relying on repetitive procedures like checking for bugs across different platforms." [https://medium.com/@malcolm_78512/missing-the-boat-why-it-services-leaders-are-struggling-in-the-ai-boom-41035a266ebb]
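
To illustrate the point about clear specifications: once the rules of an interface are written down, checking every combination of inputs is little more than a loop. The sketch below is a hypothetical Python example; the `shipping_fee` function and its rules are invented for illustration and are not taken from any real system. It runs all combinations of boundary and invalid inputs with `itertools.product` and checks each result against properties restated from the (assumed) spec.

```python
import math
from itertools import product

# Hypothetical interface with an assumed, clearly written specification:
#   * country must be "DK", "SE", or "NO"; anything else is rejected
#   * 0 < weight_kg <= 30; anything else is rejected
#   * base fee is 50 for DK and 70 otherwise, plus 10 per started 5 kg
#   * express delivery doubles the fee
VALID_COUNTRIES = {"DK", "SE", "NO"}


def shipping_fee(country: str, weight_kg: float, express: bool) -> int:
    if country not in VALID_COUNTRIES:
        raise ValueError(f"unsupported country: {country}")
    if not 0 < weight_kg <= 30:
        raise ValueError(f"weight out of range: {weight_kg}")
    base = 50 if country == "DK" else 70
    fee = base + 10 * math.ceil(weight_kg / 5)
    return fee * 2 if express else fee


# Inputs chosen around the boundaries of the spec, plus deliberately invalid values.
COUNTRIES = ["DK", "SE", "NO", "XX"]
WEIGHTS = [-1, 0, 0.5, 5, 5.01, 30, 30.5]
EXPRESS = [False, True]


def is_valid(country: str, weight_kg: float) -> bool:
    """The spec's validity rule, restated independently of the implementation."""
    return country in VALID_COUNTRIES and 0 < weight_kg <= 30


def check_all_permutations() -> tuple:
    """Run every input combination and return (cases checked, failures)."""
    failures = []
    cases = 0
    for country, weight, express in product(COUNTRIES, WEIGHTS, EXPRESS):
        cases += 1
        try:
            fee = shipping_fee(country, weight, express)
        except ValueError:
            if is_valid(country, weight):
                failures.append((country, weight, express, "rejected valid input"))
            continue
        if not is_valid(country, weight):
            failures.append((country, weight, express, "accepted invalid input"))
        elif express and fee != 2 * shipping_fee(country, weight, False):
            failures.append((country, weight, express, "express is not double"))

    # Property from the spec: a heavier parcel never costs less.
    valid_weights = sorted(w for w in WEIGHTS if 0 < w <= 30)
    for country, express in product(sorted(VALID_COUNTRIES), EXPRESS):
        fees = [shipping_fee(country, w, express) for w in valid_weights]
        if fees != sorted(fees):
            failures.append((country, express, "fee decreases with weight"))
    return cases, failures


if __name__ == "__main__":
    cases, failures = check_all_permutations()
    print(f"{cases} combinations checked, {len(failures)} failures")
```

With 4 countries, 7 weights, and 2 delivery modes, three short lists yield 56 combinations, and adding a value to any list multiplies the coverage at no extra effort. The hard part is the specification, not the automation.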

Obviously, plenty in a non-trivial system cannot be automated. Some things aren't known yet. Others are not yet routine enough to be stated explicitly. Some are too cumbersome to automate given how rarely they recur. And a whole range of things depends on intuition and people. My favorite requirement is still for a dashboard to "give a good overview of the tickets." But that is for the end users to evaluate.

Unfortunately, just these few examples make some testing professionals worry about their job security. If testing is done either by end users or by automation, where do we shine? What is the future of the tester? It seems to me that we look at this from the wrong side: we see ourselves as being "in everything" rather than working across everything.

There is plenty to align with management about

- According to the latest “What CEOs talked about” report, three themes gained noticeable traction in Q1 2024: 1) AI, 2) sustainability, and 3) elections.

- According to Gartner's 9 future of work trends report, the topics could be the cost-of-work crisis, AI opportunities, four-day workweeks, employee conflict, skills vs. degrees, climate change, DEI, and battling job stereotypes.

- Any of the shifts in McKinsey's “The State of Organizations 2023: Ten shifts transforming organizations”.

Maybe your CEO or top manager wants to be seen doing "something" about one of them. Maybe your CEO wants to align with her stakeholders on delivering the somewhat arbitrary business targets set before the economy, the office market, and the job market went totally off the charts. If you can tap into that, you will be on the right path to aligning with her. For instance, I know we struggle to staff our test leadership roles, so part of what I do is bridging the skills vs. degrees challenge.

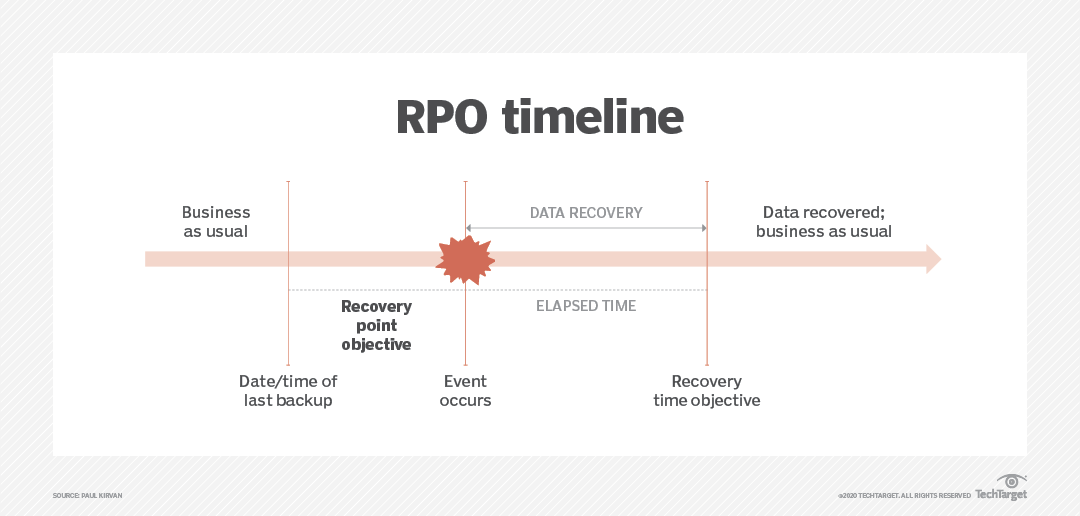

One thing that never goes out of style for the business is resilience to unforeseen events. With cyber threats and climate change in the mix, it is only a matter of time before something hits you. Try to articulate your testing around two topics:

- If an unforeseen event occurs, how quickly can we recover technically?

- If an unforeseen event occurs, how quickly can we recover business operations?

Use your testing worries and your communication skills to frame your testing in terms of these two seemingly ancient backup terms (RTO, the Recovery Time Objective, and RPO, the Recovery Point Objective; drawing below), with questions like: How fast is the return time on your releases? How much business data is in transit in your system? What happens to orders that are halfway processed? What if external integrations are down? How do we process previously submitted data?
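
To make the two terms concrete: RTO is the longest outage the business can tolerate, and RPO is the largest window of data it can afford to lose, measured as the time between the last recoverable point and the incident. Below is a minimal Python sketch, assuming a recovery drill where you record three timestamps yourself; the `RecoveryDrill` class, the `evaluate` function, and all times and targets are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class RecoveryDrill:
    """Timestamps recorded during a (hypothetical) recovery drill."""
    last_recovery_point: datetime  # newest backup or replica we could restore from
    incident_at: datetime          # when the simulated failure started
    restored_at: datetime          # when the service was usable again


def evaluate(drill: RecoveryDrill, rto: timedelta, rpo: timedelta) -> dict:
    """Compare one drill against RTO and RPO targets."""
    if not drill.last_recovery_point <= drill.incident_at <= drill.restored_at:
        raise ValueError("timestamps are out of order")
    downtime = drill.restored_at - drill.incident_at
    # Anything written after the last recovery point is lost.
    data_loss_window = drill.incident_at - drill.last_recovery_point
    return {
        "downtime": downtime,
        "data_loss_window": data_loss_window,
        "meets_rto": downtime <= rto,
        "meets_rpo": data_loss_window <= rpo,
    }


if __name__ == "__main__":
    # Assumed scenario: nightly backup at 02:00, failure at 09:30, service back at 11:00.
    drill = RecoveryDrill(
        last_recovery_point=datetime(2024, 6, 3, 2, 0),
        incident_at=datetime(2024, 6, 3, 9, 30),
        restored_at=datetime(2024, 6, 3, 11, 0),
    )
    print(evaluate(drill, rto=timedelta(hours=4), rpo=timedelta(hours=1)))
```

In this made-up scenario the service is back well within a 4-hour RTO (1.5 hours of downtime), but 7.5 hours of data written since the backup are gone, far outside a 1-hour RPO. That is the kind of finding worth bringing to management: a nightly backup alone does not protect business that was in flight.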

All these questions are testing questions. And yes, eventually you can automate them, but first you have to align with management on how important it is for them to keep the business running. It probably is.
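
Taking one of the questions above, "What happens to orders that are halfway processed?", here is a minimal sketch of what an automated check could eventually look like. It assumes a hypothetical three-step order flow and a durable ledger of completed steps that survives a crash; the names `process_order`, `Ledger`, `SimulatedCrash`, and the step names are made up for illustration. The check crashes the flow after each step in turn, replays the order, and verifies that every step ran exactly once.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical order flow; a real system will have its own steps.
STEPS = ("reserve_stock", "charge_payment", "ship")


class SimulatedCrash(Exception):
    """Raised to simulate the process dying partway through an order."""


@dataclass
class Ledger:
    # Durable record of completed steps per order (assumed to survive a crash).
    done: dict = field(default_factory=dict)
    # Every side effect actually performed, so the check can spot duplicates.
    effects: list = field(default_factory=list)


def process_order(order_id: str, ledger: Ledger, crash_after: Optional[str] = None) -> None:
    completed = ledger.done.setdefault(order_id, set())
    for step in STEPS:
        if step in completed:
            continue  # finished before the crash: skip it to avoid, e.g., double charging
        # Simplification: the side effect and its ledger entry are assumed to be atomic.
        ledger.effects.append((order_id, step))
        completed.add(step)
        if step == crash_after:
            raise SimulatedCrash(step)


def check_recovery_after_every_step() -> list:
    """Crash after each step in turn, replay, and return the steps whose recovery misbehaved."""
    failing = []
    for crash_step in STEPS:
        ledger = Ledger()
        try:
            process_order("order-1", ledger, crash_after=crash_step)
        except SimulatedCrash:
            pass  # the "process" died; now restart and replay the same order
        process_order("order-1", ledger)
        if ledger.effects != [("order-1", step) for step in STEPS]:
            failing.append(crash_step)
    return failing


if __name__ == "__main__":
    print("steps with broken recovery:", check_recovery_after_every_step())
```

The ledger is what makes the replay safe in this sketch. Without the `if step in completed` check, a crash after `charge_payment` would charge the customer a second time on restart, and the check would report that step as failing.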