To go fast, go slow

Requirements are not something to be confirmed only once. Very few requirements state that they only need to hold while the system is being built. We need to be better at confirming the requirements consistently and continuously, including the compliance and non-functional requirements. The best way to do this is to go slow and build instrumentation along the way.

Often when we look at requirements in a project delivery, developers think in terms of getting it done, and testers too often think about investigating it once. The assumption is that a requirement only has to work once (in the sprint or project), and then we are all good. Unfortunately, that is usually wrong. Requirements must be fulfilled both during construction and throughout the product's lifespan, again and again.

And if it is management telling you to just move along and check everything once, during construction only, send them this way! There is more to success than just shipping stuff.

This is especially a challenge for the non-functional and compliance requirements. Compliance requirements are often the company's "license to operate", so failing them can have consequences as dire as any functional failure. It is simply not enough that the solution works once. Nor is it enough if we cannot be certain whether the solution has been tampered with since we built it. I'm currently working with a team that is building a solution that interfaces with a large regional financial institution. How could we not need to confirm the solution consistently and keep the production environment under consistent change control?

Solving the Core Conflict

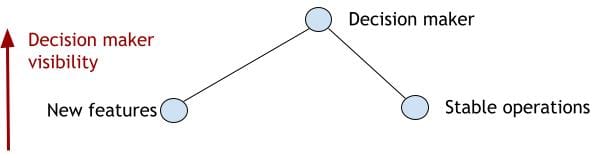

The original DevOps Handbook [IT Revolution, 2016] framed this as the challenge of both building for the sprint/project and running for the future:

“the chronic conflict of pursuing both: responding to the rapidly changing competitive landscape for features and platforms while maintaining business as usual”

We might be so feature-focused that reacting to the market is our top priority, but few companies can afford to neglect stable operations, now and in the future. The most reliable way to keep the business running is consistent (test) automation and instrumentation: instrumentation to detect when things go wrong, and automation that can react and recover services swiftly. Multiple examples and studies show that the best way to go fast is to go slow and build stability and consistency from the start. This might seem counterproductive to some fast-track developer types, but diligence benefits them too, through less rework and firefighting.
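Here is a minimal Python sketch of "detect, then react and recover". It is built on stated assumptions rather than any particular monitoring tool. A requirement is modeled as a hypothetical `Probe` with two parts. The `check` returns True while the requirement holds, and the `recover` action tries to restore it, for example by restarting a service. `Probe`, `watch` and the service name are illustrative names only.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Probe:
    """Hypothetical requirement probe: a check plus the action that restores it."""
    name: str
    check: Callable[[], bool]    # returns True while the requirement holds
    recover: Callable[[], None]  # attempts to restore the service


def watch(probe: Probe, max_recoveries: int = 3, delay_s: float = 0.0) -> bool:
    """Check the probe; on failure, run recovery and re-check, up to max_recoveries times.

    Returns True if the requirement holds at the end, or False if automated
    recovery gave up (the point where a person should be alerted).
    """
    if probe.check():
        return True
    for attempt in range(1, max_recoveries + 1):
        print(f"{probe.name}: check failed, recovery attempt {attempt}")
        probe.recover()
        time.sleep(delay_s)  # give the service time to come back before re-checking
        if probe.check():
            print(f"{probe.name}: recovered")
            return True
    print(f"{probe.name}: still failing after {max_recoveries} attempts, escalate")
    return False


if __name__ == "__main__":
    # Simulated service that is down until it is "restarted".
    state = {"up": False}
    probe = Probe(
        "payments-api health",  # hypothetical service name
        check=lambda: state["up"],
        recover=lambda: state.update(up=True),
    )
    print(watch(probe))
```

In practice `watch` would run on a schedule in whatever scheduler or monitoring platform is already in place. The `check` would call real health endpoints, which is assumed here rather than shown. Capping the recovery attempts keeps the automation from hiding a persistent failure: when it gives up, a person gets involved.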

Plan your testing for repeatability

Have a good look at your testing! How much of it is prepared for repeatability? Sure, some requirements are best explored using brain power, but I'm sure that after exploring the same thing a few times, it can be put into a form that enables repeatability and automation. Perhaps you can even turn what was once an informal exploration into something run so often that it becomes a commodity for the team. Consider advancing your pet-project session charters or hunch-based testing towards activities that are repeated often and consistently. Test automation is also where you can cover more combinations and variations in your testing.

I recently did a project where we were testing an HTTP API. After some tinkering in the Postman HTTP client, we wrote C# code to generate and execute all the relevant combinations. We did not need to cut down the combinations, as we had to when we hand-crafted payloads in Postman. Also, with the payload generation in code, it was easier to remember to update the tests when the API code changed.
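The project above used C#. The sketch below shows the same idea in Python, as an illustration rather than the team's actual code. It uses `itertools.product` to generate every combination (the Cartesian product) of a few request fields, including invalid, boundary and missing values. The field names and values are hypothetical. Sending the payloads and checking the responses is left out.

```python
import itertools
import json
from typing import Any, Iterator

# Hypothetical field domains for an HTTP API request body. In a real project
# these would come from the API specification.
FIELD_VALUES: dict[str, list[Any]] = {
    "currency": ["EUR", "DKK", "XXX"],  # "XXX" is an intentionally invalid code
    "amount": [0, 1, 999_999, -1],      # boundary and negative values
    "channel": ["web", "mobile", None],  # None means "omit the field"
}


def generate_payloads(field_values: dict[str, list[Any]]) -> Iterator[dict[str, Any]]:
    """Yield one payload per combination (full Cartesian product) of field values."""
    names = list(field_values)
    for combo in itertools.product(*(field_values[n] for n in names)):
        # Drop fields whose value is None so "missing field" is covered too.
        yield {n: v for n, v in zip(names, combo) if v is not None}


if __name__ == "__main__":
    payloads = list(generate_payloads(FIELD_VALUES))
    print(len(payloads))  # 3 * 4 * 3 = 36
    print(json.dumps(payloads[0]))
```

Because the value domains live in code, supporting a new API value means adding one list entry, and every combination that includes it is generated automatically. If the full product ever grows too large, a pairwise subset is a common way to trim it.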

Recent global cloud infrastructure breakdowns, though, have taught us that even with build processes, tooling and pipelines in place, things can still break catastrophically. If you are not rolling out gradually and do not have procedures in place to recover from failure, all the green test cases cannot help you. Your solution needs to be recoverable within a reasonable time. One way to achieve this is to build in small tests and recovery actions for all requirements, not only the "functional" ones.

- Deliver documents in smaller increments. These days even Word has built-in versioning and track changes.

- Build checks into cloud and infrastructure-as-code projects.

- Build checks into your low-code test automation.
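Here is one example of a check for infrastructure or configuration, which also detects whether a deployment has been tampered with since release. The Python sketch below records a SHA-256 baseline of every file under a directory. Later, it reports files that were added, removed or changed. The directory and file names are hypothetical. In a real setup, the baseline would need to be stored somewhere the production environment cannot modify, such as version control.

```python
import hashlib
import tempfile
from pathlib import Path


def snapshot(root: Path) -> dict[str, str]:
    """Map each file path (relative to root) to the SHA-256 hex digest of its content."""
    return {
        str(p.relative_to(root)): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def drift(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare two snapshots and list the files that were added, removed or changed."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "changed": sorted(
            k for k in baseline.keys() & current.keys() if baseline[k] != current[k]
        ),
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "app.conf").write_text("tls=on\n")  # hypothetical config file
        baseline = snapshot(root)                    # recorded at release time
        (root / "app.conf").write_text("tls=off\n")  # an unapproved change
        print(drift(baseline, snapshot(root)))
        # {'added': [], 'removed': [], 'changed': ['app.conf']}
```

Run on a schedule, a non-empty report becomes a signal for change control rather than something discovered during the next audit.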

The biggest obstacle is imagining how to set up the tests and controls. This is where a testing mindset shines: the imagination that things could be different. Next up is the opportunity and willingness to change.